We've been doing a lot of stress testing of LH at Sprezz Towers to help a client with speed issues on large tables. As part of that we've created some 60-million-row tables so that we can play with combinations and permutations of different indexing setups.

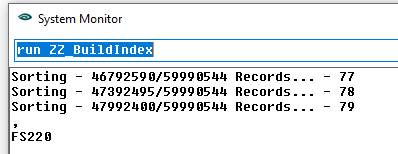

In so doing, we came across a situation where the index sort would get to 80% and then just exit with an FS220 error (FS_REL_NEED_REBUILD_ERR$). To be honest, this confused us, as there wasn't a relational index on the table. The good folks at Rev clarified that this was the wrong error to report but that, for fairly obvious reasons, we were the first to notice. After discussing it further with them, we came to the conclusion that it must be related to disk space.

So began an increasingly frustrating attempt to free up disk space (why oh why did I format my USB sticks with FAT32 and its 4GB file size limit?). Subdirectories were zipped and old files deleted until, finally, we had the 40GB or so free that we needed.

We tried again, and again the index rebuild just stopped around the 80% mark. It didn't crash; it just stopped, having cleared out the %ALL.IDS% token in the ! file.

As V119 was suspected to be the culprit, we redirected the temporary sort file in the environment to the same disk as the data tables so that we could keep an eye on what happened.

It seems that the largest sort file that can be created is 2GB, and that attempts to exceed this will fail. Note that this applies to both IDX_SETS1 and IDX_SETS2. This is a limitation of a 32-bit OS rather than of OpenInsight, so it cannot easily be addressed in version 9.x.
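If you want to keep an eye on a sort file as it grows, a small script can report how close it is to the ceiling. This is only a sketch: the function names are ours, the path you pass in is whatever your environment uses for the temporary sort file, and we are assuming "2GB" means 2 GiB (2^31 bytes).

```python
import os

# Assumption: the 2GB ceiling seen in our testing is 2 GiB (2**31 bytes).
SORT_FILE_LIMIT = 2 * 1024**3


def sort_file_headroom(path, limit=SORT_FILE_LIMIT):
    """Return (size_in_bytes, fraction_of_limit_used) for the file at path."""
    size = os.path.getsize(path)
    return size, size / limit


def is_near_limit(path, threshold=0.9, limit=SORT_FILE_LIMIT):
    """Return True once the file has reached `threshold` of the limit."""
    _, used = sort_file_headroom(path, limit)
    return used >= threshold
```

Calling `is_near_limit` on the sort file every few seconds during a rebuild would tell you whether the stall coincides with the file approaching the limit.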

Now it should be pointed out that this is a particularly extreme situation, as the row ids in this large table are five-part and quite long. With a more normal key structure than the long, complex one we were using, it is unlikely that this limit would be breached without having hundreds of millions of rows in the table. It does mean, though, that if you need to rebuild such large indexes you need to undertake the task in OI 10.1, where the issue has been resolved. Note also that this doesn't affect day-to-day use of the indexed table: normal additions and deletions will still update the index. The issue only affects a rebuild.
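To get a feel for how key length and row count trade off against the limit, here is a back-of-envelope sketch. The per-entry byte counts are purely illustrative assumptions; we haven't documented the actual layout of the sort file here, and a real entry will carry the indexed value and some overhead as well as the key.

```python
# Assumption: the 2GB ceiling is 2 GiB (2**31 bytes).
SORT_FILE_LIMIT = 2 * 1024**3


def estimated_sort_bytes(rows, avg_entry_bytes):
    """Rough sort file size: one entry of the given average size per row."""
    return rows * avg_entry_bytes


def max_entries(avg_entry_bytes, limit=SORT_FILE_LIMIT):
    """Largest number of entries of the given average size that fit under the limit."""
    if avg_entry_bytes <= 0:
        raise ValueError("avg_entry_bytes must be positive")
    return limit // avg_entry_bytes


if __name__ == "__main__":
    rows = 60_000_000
    # Hypothetical average entry sizes in bytes, not measured values.
    for entry_bytes in (10, 30, 40):
        total = estimated_sort_bytes(rows, entry_bytes)
        verdict = "over" if total > SORT_FILE_LIMIT else "under"
        print(f"{entry_bytes} bytes/entry: {total:,} bytes ({verdict} the limit), "
              f"room for {max_entries(entry_bytes):,} entries")
```

On these made-up numbers, 60 million entries at 40 bytes each would overshoot 2 GiB, while entries of around 10 bytes would leave room for a couple of hundred million rows, which is in line with what we describe above.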

As a side note, if you DO want to do this in 10.x there is a major caveat. Indexing has been rewritten for 10 and works differently from 9. This means that, out of the box, OI 9 and OI 10 use different indexing routines that are not compatible with each other. Fortunately Rev have provided a way to deal with this.

All that we need to do is edit or create a record in SYSENV called CFG_RTI_UPDATE_INDEX. Set line 1 to RTI_UPDATE_INDEX_90, save it, then exit OI and restart. This will force 10 to use the 9 indexing logic. We've been working a lot with indexes on large tables in 10, and we'd recommend using this setting in any case if you're working with large data volumes. By large tables, we mean tens of millions of rows.

For the avoidance of doubt: if you are sharing data between OI 9 and OI 10 you MUST do this or you will experience issues.
