We've increasingly been working with people who have a requirement to encode their data in OI using UTF-8 and with this increase we've seen many concomitant problems. This article sets out to correct some common misconceptions and to attempt to provide guidance for the successful implementation of a UTF-8 OI system.

To ensure that we're all singing from the same hymn sheet let's firstly consider what UTF-8 actually is. Historically we've been used to having up to 256 characters available to us with the first 127 being defined by the ASCII standard and the subsequent 127 being sort of defined by the ANSI standard. When recording unaccented data this meant that pretty much all of our characters fell into the ASCII range. However when we start to require accented characters we run into a problem. We only have 127 characters to play with and there are THOUSANDS of accented characters in use around the world. In addition there are alphabets using characters which we don't even recognise as letters - Cyrillic, Kanji, Arabic etc.

For this reason the concept of "code pages" was implemented. This effectively told the computer what glyph to display when a character over ASCII 127 is encountered. So if the code page was set to 437 (the original PC code page) an ASCII 142 would be displayed as Ä but if the code page was set to 1250 say (Western European) the same ASCII value would be displayed as the character Ž.

Code pages were normally adequate when you were dealing with a single language, but as applications became more international clashes started to occur. For example using the code page 437 you wouldn't be able to store a record which contained both Ž and Ä because the former character doesn't exist in that code page.

UTF-8 provided a solution to this issue as it allowed the encoding of pretty much any character by allowing a character to be represented by more than one byte - a multi-byte string. Most letters are encoded in 2 bytes, but extended diacritical marks and other complex characters can take 3 or even 4 bytes to encode. As ASCII existed as a standard, UTF-8 will see any character <= 127 as an ASCII character requiring only 1 byte. However the moment you go above 127 you are telling UTF-8 "this is a multi-byte character string!". How does it know how many bytes are in the string? It doesn't just guess or look for some kind of termination character, rather it looks at the significant bits of the first byte.

Taking you back to binary 101, an eight bit byte starts with the most significant bits and continues to the least significant bit. so the first bit represents 128, the next 64, the next 32 and so on down to the last bit representing 1. If a byte is 00000000 then this represents 0, it it is 11111111 then this represents 256 (128 + 64 + 32 + 16 + 8 + 4 + 2 + 1). So if the most significant bit is a 0, UTF-8 knows that this is a single byte character. Logically that leaves only 7 bits to represent the character, so we can have up to 127 values (128 including 0).

If the first three bits are 110 then UTF-8 knows that this is a 2 byte character and expects the 2nd byte to begin with the bits 10. If the first four bits are 1110 then UTF-8 knows that this is a 3 byte character and it expects the 2nd and 3rd bytes to begin with the bits 10. If the first five bits are 11110 then UTF-8 knows that this is a 4 byte character and expects the 2nd, 3rd and 4th bytes to begin with the bits 10.

If UTF8 hits an initial character that indicates this is a multi-byte string (ie anything > char 127) and the following character or characters DON'T match the rules above relating to the first two bits then UTF-8 won't know what to do and will just process the required number of characters and display a "?".

Now this has MAJOR repercussions when moving from ANSI to UTF8. Let's firstly consider those multi-byte identifiers.

In ANSI which characters start with 110? Well 5 bits can represent 0-31 so with a byte beginning 110 we can have 32 characters - being ÀÁÂÃÄÅÆÇÈÉÊËÌÍÎÏÐÑÒÓÔÕÖ×ØÙÚÛÜÝÞß. Which characters start with 1110? Well 4 bits can represent 0-15, so 16 characters being àáâãäåæçèéêëìíîï. Finally, which characters start with 11110? Well 3 bits can represent 0-7, so 8 characters.ðñòóôõö÷.

Now following on from this, you'll remember that in multi-byte characters the trailing bytes must begin with the bits 10 to be considered valid. Which characters start 10? Well there are 6 bits so 64 possible characters and those characters are€‚ƒ„…†‡ˆ‰Š‹ŒŽ‘’“”•–—˜™š›œžŸ ¡¢£¤¥¦§¨©ª«¬®¯°±²³´µ¶·¸¹º»¼½¾¿.

(From hereon in I am going to concentrate my explanations around a single sample character - the lowercase e-acute (é). This is purely to simplify the explanation. I could have chosen any valid UTF-8 character.)

Putting all of this together, let's look at a possible scenario. Imagine that in your ANSI OpenInsight data you stored the name "Beyoncé Giselle Knowles-Carter". Then one day you clicked the magic UTF-8 check box without first running an ANSI_UTF8 conversion.. The UTF-8 processor might get to the é (11101001) and say "Oooh look, it starts 1110 - this must be a 3 byte character - but hang on the next two characters are a space and a G - they don't start 10, so that isn't a valid multi-byte character. I'll just insert a question mark". And suddenly QueenB might become "Beyonc?iselle Knowles-Carter"

The chances of the following characters starting 10 are slim, so all of your accented characters might just get swapped out for question marks with some of the following bytes being eaten.

That's the theory, so let's see what actually happens...

Firstly with OI in ANSI mode, we'll create a row containing the string "Beyoncé Giselle Knowles-Carter" and we'll write a little program which does nothing but read the row so that we can look at it in the debugger. The debugger is great for looking at what is actually in the variable at a byte level rather than an a character level. The hex representation shows the bytes NOT the characters.

So firstly, in ANSI mode, the entered data

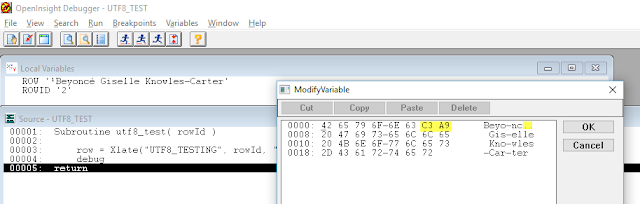

We can either enter the é by using Ctrl-AltGr-e or by using the numeric keypad and entering Alt-0233. Now we can run our little test program which simply Xlates the row and allows us to inspect the variable in the debugger :-

As you can see, the é character in hex is E9 (Char(233)).

Now time for a brief diversion. It's something that we might not think about on a day-to-day basis , because it's not relevant to us, but Windows doesn't actually use ANSI, it uses UTF-16. But we're SEEING the appropriate character. You'll be relieved to hear that it's not sorcery, the Presentation Server simply says "Windows wants UTF-16 so I'll call ANSI_UNICODE and convert the E9 into the Unicode equivalent for display purposes". Then when we want to save the row, the Presentation server reverses the process by calling UNICODE_ANSI to transform the multi-byte characters into their ANSI form.

Now let's put our OpenInsight into UTF8 mode and repeat the process, again using Ctrl-AltGr of Alt-0233.

Running our program again we see that this time, our é is represented by C3A9.

Whilst we're in UTF-8 mode, let's just go back and look at our row 1, because if the theory above is correct, we should see corrupted data.

Theory failure! The character is still displaying correctly! Let's double check what's actually there using our program again.

It's still an E9. But what happens if we save the row then run again?

The data has been saved with the UTF-8 replacement for E9.

This behaviour is not expected. Speaking to Revelation Software about this it seems that the logic flow that converts the UTF8 to Unicode is being a little more forgiving than it ought and allows the E9 to be processed as an é and converts it to the Unicode equivalent. On writing it converts Unicode to UTF8 and the correct UTF8 is written back.

This may seem like desirable behaviour but stop for a moment and think. When you are working at the engine level (for example, running a program), the conversion doesn't happen. So if you have a database containing a number of E9s and you switch to UTF-8 mode, any rows that haven't been displayed and saved will remain as E9s whilst any new rows, or re-saved rows will be C3A9s. To illustrate what might happen, I populated a test table with 52 records of forms of Beyoncé, 25 using UTF8 é, 25 using ANSI é and two using UTF8 ò. I added a Btree index and sorted the report by name.

You'll see quite obviously that these aren't the results you'd hope to see. We'd have the same issue when using BTREE.EXTRACT. Visually it seems we have 50 Beyoncés, but in reality...

The lookup predictably doesn't retrieve the ANSI values.

The question is though, how can we clean up our data if we have mixed ANSI and UTF8? The answer is, there's no magic button. Let's take our original string "Beyoncé Giselle Knowles-Carter" in both ANSI and UTF8 forms and go back to ANSI with OI. For illustration purposes, we'll try and clean up first the ANSI, then the UTF8. You can see in the image below that the ansi_utf8 routine has successfully converted the ANSI (convertedAnsi) to be equivalent to the UTF8. But you can further see that it has trashed the UTF8(convertedUtf8).

Putting our OI back in to UTF8 mode we can look at the data in the editor and see that line 4 is indeed garbled.

If you're in this unfortunate position then you're going to have to be pretty ingenious to clean up. In a perfect world you'd just go back to a pre-UTF8 copy of the data and convert first!

The obvious moral of the story is that the check-box ISN'T magical and if you're going to check it AND you have existing data above char(127) you MUST run an ANSI_UTF8 conversion first.

As a final coda to all of the above... it is worth noting that UTF-8 supports many locales and some character glyphs are repeated across the locales but at different code points. In other words a certain symbol might have a value of 200, 4000 and 6000. When you come to sort these characters will sort in different places. Normally this wouldn't be an issue BUT if your users are cutting and pasting data from different locales it can be a problem. Like with most things software it's fixable - but that's beyond the scope of this blog post.

| Making Databases Happen |

USA +1 267 238 3814

|

3 Comments:

Great article on a subject I know is elusive to many. Thanks for the contribution to the community with this.

By Don Bakke, At

14 October 2018 at 05:50

Don Bakke, At

14 October 2018 at 05:50

Thank you Don. Having spent a large chunk of time helping a client clean up a very messy database, we didn't want the knowledge to go to waste!

By Sprezz, At

15 October 2018 at 13:28

Sprezz, At

15 October 2018 at 13:28

Great article, thank you very much for posting it! Please allow me just a little note: when you say "... if it is 11111111 then this represents 256 (128 + 64 + 32 + 16 + 8 + 4 + 2 + 1)...", it is not completely correct. Binary 11111111 actually represents 255, or 256 values if you're including 0.

By Stefano, At

10 September 2019 at 10:18

Stefano, At

10 September 2019 at 10:18

Post a Comment

Subscribe to Post Comments [Atom]

<< Home